Oh, behave! On BDD and E2E tests at Clear Street

At Clear Street, we build products and experiences that move billions of dollars every day. Under the weight of that responsibility, testing is a priority. Collaborating with the business and using a shared language when defining the tests helps us reduce risk and increase confidence in shipping quality code. BDD and E2E testing are the weapons of choice for us.

BDD (behavior-driven development) is a methodology that helps developers and business stakeholders collaborate by building a shared language for product specification, where the specification itself is testable code.

E2E testing (or end-to-end testing) refers to integration tests that are designed to test the system as a whole, as opposed to unit tests, which test a single unit in isolation, typically a class or a small set of code files.

There are many different flavors of BDD and E2E testing. In this blog, we’ll cover where this testing meets Clear Street, how we use these methodologies, the tooling we built around them, where they help us and where they don’t, challenges, and where we go next.

Back to the beginning

Let’s rewind 3 years to the year 2020. That was a fun year, right? The birds were chirping, and we were all stuck inside. Also that year, management decided that it was time to replace our stable-but-also-dying-inside transaction engine (named bank) with a new-and-shiny-architected-for-scale engine (named bk).

💡 This was a bold, game-changing decision by management (perhaps all that indoor time was getting to them). We’ve discussed this migration in detail; see Part 1, Part 2 & Part 3.

The old bank engine was built by our co-founder and Chief Technology Officer Sachin Kumar in the very early days of the firm. The new bk engine was to be built by a new team that lacked an understanding of the existing engine and had limited prime brokerage knowledge in general.

They started by reverse-engineering the old engine, speaking with the various business experts at the firm, writing unit tests and manually testing the new engine, but this wasn’t very fruitful.

Progress was extremely slow and bugs kept cropping up (the legend goes that the entire team went completely bald from all the hair pulling).

Photo taken during our team meeting that year (source)

The team lead, Christian, decided enough was enough and started working on a solution: writing the product specification as tests in a language that both the business and engineering teams understand. He wrote a tool called drone to run those tests against the entire system.

That specification was written by engineers and the business together and was tested against both the old and the new system. It proved invaluable in getting the new system shipped with great quality.

How does drone work?

drone uses a Python library called behave to run tests written in a natural-language style, using the Gherkin syntax. The tests are grouped into features, where each feature has several scenarios that define how the feature behaves.

Here’s an example of one of our scenarios:

The scenario is composed of a series of steps, where each step can be one of:

- Given - a step that configures the system to prepare the test.

- When - an action that triggers the system in some way.

- Then - an assertion that checks that what was supposed to happen actually happened.

The scenario in the example sets up an instrument (a stock in this case) and an account in the system, then buys 5 shares of that stock in the account. It next tests that the trade was created and the ledgers were updated correctly. Then, it rolls the entire system forward 2 days and tests that the trade was settled and the ledgers were updated correctly again.
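To make the three step kinds concrete, here is a minimal sketch. The scenario text is a hypothetical reconstruction from the description above, not our actual feature file (only the account step follows wording shown later in this post), and `classify_steps` is an illustrative helper that is not part of behave or drone. It shows how `And` steps take on the kind of the step before them.

```python
from typing import List, Optional, Tuple

# Hypothetical scenario text, reconstructed from the prose description.
SCENARIO = """
Scenario: Buy shares and settle two days later
  Given I have an instrument "XYZ" of type stock
  And I have an account "Test Account" of type pab, with customer type dvp, and margin type none
  When I buy 5 shares of "XYZ" in account "Test Account"
  Then a trade exists for account "Test Account"
  And the ledgers reflect the trade
  When I roll the system forward 2 days
  Then the trade is settled
  And the ledgers reflect the settlement
"""

STEP_KEYWORDS = ("Given", "When", "Then")


def classify_steps(scenario: str) -> List[Tuple[str, str]]:
    """Return (kind, text) pairs; 'And'/'But' inherit the previous step's kind."""
    steps: List[Tuple[str, str]] = []
    current_kind: Optional[str] = None
    for raw_line in scenario.splitlines():
        keyword, _, rest = raw_line.strip().partition(" ")
        if keyword in STEP_KEYWORDS:
            current_kind = keyword
        elif keyword in ("And", "But") and current_kind is not None:
            pass  # keep the kind of the preceding step
        else:
            continue  # not a step line (blank, or the "Scenario:" header)
        steps.append((current_kind, rest))
    return steps


for kind, text in classify_steps(SCENARIO):
    print(f"{kind:<5} | {text}")
```

Reading the output top to bottom gives the same setup, action, assertion rhythm as the prose: two Given steps, a When, two Then steps, and then a second When/Then round after the rollover.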

Each of these steps is matched to actual Python code that talks to various components in the system to make this work behind the scenes. For example, the step to create an account is defined like this:

Python

    from typing import Optional

    from behave import given

    # Context, proto, async_step, and _create_account come from elsewhere in
    # our codebase or its dependencies and are not shown here.


    @given(
        'I have an account "{account_name}" of type {account_type:AccountType}, with customer '
        'type {customer_type:CustomerType}, and margin type "{margin_type:MarginType}"'
    )
    @async_step
    async def creates_account_with_given_types(
        context: Context,
        account_name: str,
        account_type: proto.AccountType,
        customer_type: Optional[proto.CustomerType],
        margin_type: Optional[proto.MarginType],
    ) -> None:
        """
        Creates an account with the given account type, customer type and margin type and injects it into the context

        :param account_name: Account name for the account to be created
        :param account_type: type of account to create
        :param customer_type: type of customer
        :param margin_type: type of margin for this account
        :example: Given I have an account "Test Account" of type pab, with customer type dvp, and margin type none
        :example_description: Results in one account that can be referenced as 'Test Account' in lieu of an account_id,
                              these are available in the drone context. This account will be of the given types.
                              Note that the combination of types must be a valid combination or this step will fail.
        """
        # Delegate to the shared helper that actually creates the account.
        await _create_account(
            context=context,
            account_name=account_name,
            account_type=account_type,
            customer_type=customer_type,
            margin_type=margin_type,
        )

behave searches all of the step definitions to find one that matches the text, then parses the arguments (with the help of custom parsers we defined for special types) and calls this function, which is standard Python code.
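As a rough illustration of that matching flow, here is a simplified, standard-library-only emulation. It is not how behave is implemented (behave builds on `parse`-style patterns and lets you register custom types with `behave.register_type`); the `step` decorator, `run_step`, `TYPE_PARSERS`, and the `AccountType` enum below are all hypothetical stand-ins.

```python
import re
from enum import Enum
from typing import Any, Callable, Dict, List


class AccountType(Enum):
    """Hypothetical stand-in for the real proto.AccountType."""
    PAB = "pab"
    CASH = "cash"


# Custom parsers for special types, keyed by the type name used in a pattern.
TYPE_PARSERS: Dict[str, Callable[[str], Any]] = {
    "AccountType": lambda text: AccountType(text.lower()),
    "Int": int,
}

PLACEHOLDER = re.compile(r"\{(\w+)(?::(\w+))?\}")
REGISTRY: List[tuple] = []  # (compiled regex, {arg name: type name}, function)


def step(pattern: str) -> Callable:
    """Register a function under a pattern such as 'I buy {quantity:Int} shares'."""
    types: Dict[str, str] = {}
    parts: List[str] = []
    position = 0
    for match in PLACEHOLDER.finditer(pattern):
        parts.append(re.escape(pattern[position:match.start()]))
        name, type_name = match.group(1), match.group(2)
        if type_name:
            types[name] = type_name
        parts.append(f"(?P<{name}>.+?)")  # capture the raw argument text
        position = match.end()
    parts.append(re.escape(pattern[position:]))
    compiled = re.compile("".join(parts))

    def decorator(func: Callable) -> Callable:
        REGISTRY.append((compiled, types, func))
        return func

    return decorator


def run_step(text: str) -> Any:
    """Find the first pattern matching the whole text, convert arguments, call it."""
    for compiled, types, func in REGISTRY:
        match = compiled.fullmatch(text)
        if match is None:
            continue
        kwargs = {
            name: TYPE_PARSERS[types[name]](raw) if name in types else raw
            for name, raw in match.groupdict().items()
        }
        return func(**kwargs)
    raise LookupError(f"no step definition matches: {text!r}")


@step('I have an account "{account_name}" of type {account_type:AccountType}')
def create_account(account_name: str, account_type: AccountType) -> dict:
    return {"name": account_name, "type": account_type}


print(run_step('I have an account "Test Account" of type pab'))
```

The step function receives a real `AccountType` value instead of the raw string `pab`, which is the job the custom parsers do for us in drone.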

Continuous Integration (CI)

We ran these tests manually from the command line for some time before we had the time to improve our testing story. We wanted the tests to run automatically each day, or at the click of a button on a specific pull request (we didn’t want them to run automatically on every pull request; more on that later), so that we would see breaks early and fix them quickly, tightening our feedback loop and increasing confidence in our releases.

To build this, we relied on Ivy, a service developed by our wonderful infrastructure team that deploys an ephemeral development environment on local Kubernetes.

💡 Read more about Ivy in this blog post.

We deploy our main development branch for the daily tests, or a specific branch for testing a pull request. We start all of the services we need to run the tests, including our drone server. We then send a request to the drone server to run all scenarios which are tagged with the service we’re testing (the example scenario from above is tagged as bk.business_requirements, meaning it’s a scenario that’s part of the bk test suite).

We started with just bk, but we soon wanted to add this to the other services our team owns and to increase adoption among other teams in the firm.

So, we built three Python libraries to make setting up e2e tests for a service a pleasant experience:

- libivy - a library that speaks with the Ivy API to create branch deploys and bring up services.

- libdrone - a library that speaks with the drone server API to run scenarios, wait for them to finish and return the results.

- libe2e - a higher-level library that uses libivy and libdrone to create branch deploys, bring up services, wait for them, run the scenarios, wait for the results and post them to Slack.
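The layering of the three libraries can be sketched roughly as follows. Every class and method name here (`FakeDeploys`, `FakeScenarios`, `FakeNotifier`, `run_e2e`, and their methods) is hypothetical and does not reflect the real libivy, libdrone, or libe2e APIs; the sketch only shows the order of operations described above, using in-memory fakes so it runs on its own.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeDeploys:
    """Stands in for the role libivy plays; method names are hypothetical."""
    calls: List[str] = field(default_factory=list)

    def create_deploy(self, branch: str) -> str:
        self.calls.append(f"create_deploy({branch})")
        return "deploy-1"

    def start_services(self, deploy_id: str, services: List[str]) -> None:
        self.calls.append(f"start_services({', '.join(services)})")

    def wait_until_ready(self, deploy_id: str) -> None:
        self.calls.append("wait_until_ready")


@dataclass
class FakeScenarios:
    """Stands in for the role libdrone plays; maps scenario name -> passed."""
    results: Dict[str, bool]

    def run_tags(self, deploy_id: str, tags: List[str]) -> Dict[str, bool]:
        return dict(self.results)


@dataclass
class FakeNotifier:
    """Stands in for a Slack client; it only records the messages."""
    messages: List[str] = field(default_factory=list)

    def post(self, channel: str, message: str) -> None:
        self.messages.append(f"#{channel}: {message}")


def run_e2e(deploys, scenarios, notifier, *, branch, services, tags, channel) -> bool:
    """The role libe2e plays: deploy, start, wait, run, report."""
    deploy_id = deploys.create_deploy(branch)
    deploys.start_services(deploy_id, services)
    deploys.wait_until_ready(deploy_id)
    results = scenarios.run_tags(deploy_id, tags)
    failed = sorted(name for name, passed in results.items() if not passed)
    if failed:
        summary = f"{len(failed)} of {len(results)} scenarios failed: {', '.join(failed)}"
    else:
        summary = f"all {len(results)} scenarios passed"
    notifier.post(channel, summary)
    return not failed


deploys = FakeDeploys()
notifier = FakeNotifier()
ok = run_e2e(
    deploys,
    FakeScenarios({"buy and settle": True, "sell short": False}),
    notifier,
    branch="my-feature",
    services=["bk", "bkgate"],
    tags=["bk.business_requirements"],
    channel="e2e-results",
)
print(ok, notifier.messages)
```

Keeping the deploy client and the scenario client behind small interfaces like this is what lets a higher-level runner be reused across services with nothing but a different configuration.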

Here’s how we use libe2e to run the e2e tests for bk:

Python

    from clearstreet import libe2e

    config = libe2e.Config(
        service_name="bk",
        slack_channel="csc-cs-e2e",
        parallelism=2,
        service_dependencies=[
            "proxygate",
            "bkfacade",
            "appliances",
            "tradesuite",
            # ... more services here
        ],
        wait_for_connectors=[
            libe2e.Connector("apolloconnector", "postgres12-bk"),
            libe2e.Connector("connector", "micro-connector-up"),
        ],
        local_dependencies=[
            "bktxprocessor",
            "bkgate",
            "bkgatekafka",
            "bkgatefile",
            # ... more services here
        ],
        drone_tags=[
            "bk.business_requirements",
            "bk.technical_requirements",
        ],
        additional_tilt_args=[
            "--env",
            "chrono.CHRONO_CHECKINS=bkcoordinator",
            "--env",
            "bkfacade.BKFACADE_MODE=local-only",
        ],
    )


    def main():
        libe2e.run(config)


    if __name__ == "__main__":
        main()

Notable details on the configuration include:

- parallelism defines how many branch deploys we create. Here we create 2 branch deploys, split the scenarios between them, and run them in parallel in 2 different environments to save time.

- service_dependencies defines all of the services we need to start up for our tests, and local_dependencies defines which of those services should use the local code from the branch. The services that are not local are ones we don’t intend to test specifically; for those, we use the latest pre-built images from the development branch to save build time.

- additional_tilt_args defines additional configuration we pass to the services (like setting certain feature flags). It’s called tilt because our local Kubernetes environment is built on Tilt.
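To illustrate what `parallelism` implies, here is a minimal sketch of splitting scenarios across environments and running the groups concurrently. How libe2e actually divides the work is not described here, so the round-robin split is an assumption, and `partition`, `run_in_parallel`, and `fake_run_group` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def partition(scenarios: List[str], parallelism: int) -> List[List[str]]:
    """Deal scenarios round-robin into `parallelism` groups (an assumed strategy)."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    return [scenarios[index::parallelism] for index in range(parallelism)]


def run_in_parallel(
    scenarios: List[str],
    parallelism: int,
    run_group: Callable[[int, List[str]], Dict[str, bool]],
) -> Dict[str, bool]:
    """Run each group in its own environment and merge the results."""
    groups = partition(scenarios, parallelism)
    merged: Dict[str, bool] = {}
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        futures = [
            pool.submit(run_group, environment, group)
            for environment, group in enumerate(groups)
        ]
        for future in futures:
            merged.update(future.result())
    return merged


def fake_run_group(environment: int, group: List[str]) -> Dict[str, bool]:
    """Placeholder for 'send this group to the drone server in that environment'."""
    return {name: True for name in group}


names = ["s1", "s2", "s3", "s4", "s5"]
print(partition(names, 2))
print(run_in_parallel(names, 2, fake_run_group))
```

A round-robin split is simple but ignores how long each scenario takes; balancing by historical duration would be a natural refinement if one environment consistently finishes much later than the other.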

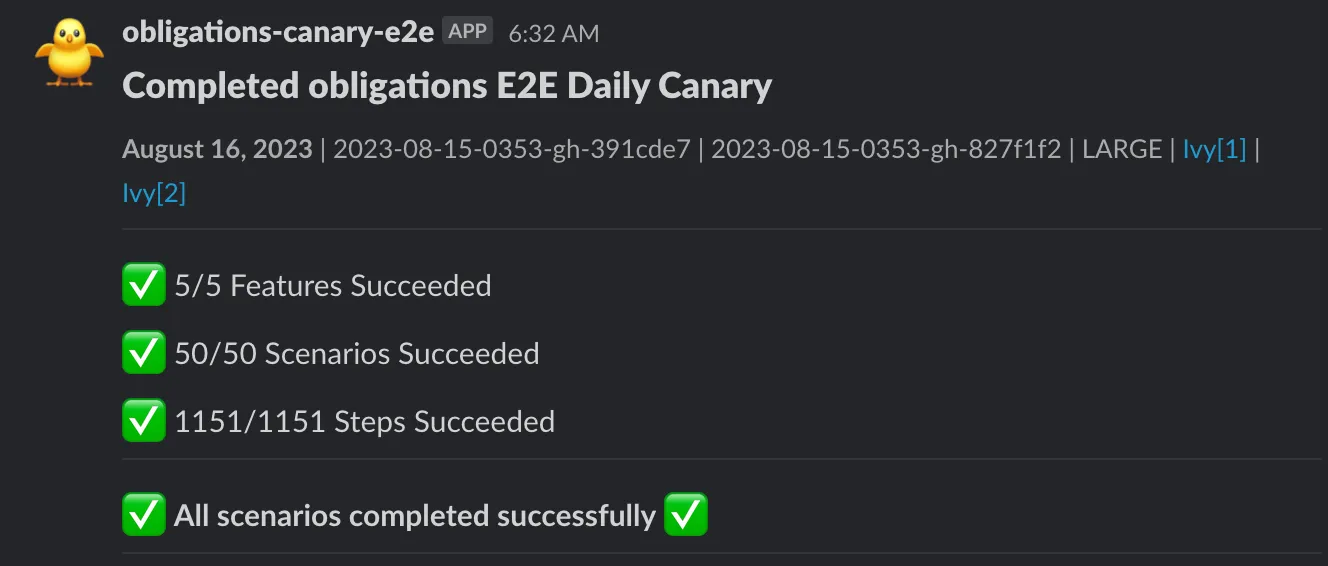

Here’s what a successful e2e run looks like on Slack (in this example, for our obligations service):

And here’s a bad one to compare:

We have an on-call person designated to look into any failures that arise and fix them. And for our more critical services like bk, we do not deploy to production unless we have a successful e2e run for the artifact we want to deploy.

Drone UI

Following our continuous integration setup, we wanted to provide a UI to make the experience even better. This aimed to help with both running the tests locally (using CLI or the API was cumbersome), and tracking the test results, both locally and for CI failures.

Here’s how the UI looks before we run anything:

We select scenarios to run using the left panels, either by using the “Features” view or the “Tags” view. Here we chose all of the scenarios for the custody team and a single scenario from the bk.business_requirements tag. We can see this results in 95 scenarios in the top button which we can click to run the scenarios.

Once we run a set of scenarios, we’ll see a session in the top left panel which we can click to track the test live as it runs:

If we have an error we can drill down into the scenario to see which step failed, and with what error:

For tests that run in continuous integration, we keep the environment alive if there’s a failure, and you can land in the drone UI straight from the Slack link to troubleshoot the error.

Challenges

Not everything is rosy, of course. Here are some of the challenges we are facing; this approach may not be a perfect fit for all use cases:

- High maintenance: As with all integration tests that involve a lot of components, there’s a lot of flakiness involved. Breaking API changes in components, unrelated failures, one scenario changing state for another scenario, a lot of false positives, you name it: e2e tests break a lot. It’s a side effect of a very complex system. Keeping this all afloat requires ongoing investment. Often, we are adding retries, fixing scenarios to make them more isolated, and handling edge cases. We decided that the investment is worth it for us, since safety is very important for a financial application like ours, but it might not be worth it for other systems with less strict requirements.

- Slowness: Running the tests is slow. Bringing up the environment takes about 20-25 minutes before we even start running the tests. This, combined with the flakiness outlined in the previous point, is why we don’t want to run this automatically for every pull request.

- Code hygiene: At the time of this writing, drone has been adopted in some fashion by 6 teams. That’s a lot of engineers contributing code to the project, and it’s hard to maintain consistency. For example, you can find multiple step definitions that are worded differently but do pretty much the same thing. Managing all of the dependencies on external Python packages is a challenge in itself.
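The retries mentioned under high maintenance could look something like the sketch below. This is a generic retry-with-backoff helper written for illustration, not code from drone; the `retry` function, its parameters, and `ledger_is_updated` are all hypothetical.

```python
import time
from typing import Callable, List, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    action: Callable[[], T],
    *,
    attempts: int = 3,
    delay_seconds: float = 0.5,
    backoff: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (AssertionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `action` until it succeeds or `attempts` is exhausted."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    delay = delay_seconds
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the real failure
            sleep(delay)
            delay *= backoff  # wait longer before each further attempt
    raise RuntimeError("unreachable")


# Usage: a check that only passes on the third call, as an eventually
# consistent system might behave. Pauses are recorded instead of slept.
calls = {"count": 0}
pauses: List[float] = []


def ledger_is_updated() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise AssertionError("ledger not updated yet")
    return "ok"


result = retry(ledger_is_updated, sleep=pauses.append)
print(result, calls["count"], pauses)
```

Retrying only on a narrow set of exceptions matters: a blanket retry can hide a genuine regression behind an occasional lucky pass, which is exactly the kind of false confidence e2e tests are supposed to remove.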

Future improvements

We are far from done, and there are a lot of avenues to improve in the future. Here’s a partial list:

- Improve performance: How can we make the tests faster? One thought would be creating a pool of ivy environments on standby which the e2e tests can pick up and save the startup costs. Another is to build lightweight versions of each service that contain most of the business logic but use lighter resources such as in-memory storage instead of full databases, for example.

- Increase adoption: How can we encourage more teams in the firm to adopt drone? For example, we have a lot of code that uses Snowflake, a database in the cloud that is tricky to integrate into our local dev environments in an isolated way. Other teams want to be able to mock parts of the system and not bring everything up each time.

- Improve user experience: For example, to view logs, developers currently need to go to the Tilt UI or Grafana. Ideally, the logs would be linked directly from the failed scenario in the drone UI. Another pressing issue is that when an engineer wants to run an unfamiliar scenario locally, it’s not always simple to know which services they need to bring up for it to work. Wouldn’t it be nice to have the feature or scenario define the services it needs and have drone bring those up for you automatically?

- Extend our tooling to more testing needs: We currently focus on correctness, but we’d also like to find performance regressions (performance is a feature!) and to run chaos tests and stress tests that exercise the system under extreme conditions. Can we extend drone for this, or do we need additional tooling?

- Going open source: We would love for this to be open source. The challenge is that currently our specific business code is tied into the code that others could use. How do we make pleasant abstractions and package a web server that reads another user’s scenarios and runs their code?
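As one possible shape for the idea of scenarios declaring the services they need, here is a speculative sketch. Nothing like this exists in drone today: the `requires.` tag convention, the `services_for` helper, and the dependency map (with made-up service names) are all invented for illustration.

```python
from typing import Dict, Iterable, List, Set

REQUIRES_PREFIX = "requires."  # hypothetical tag convention, e.g. "requires.gateway"

# Invented dependency map: each service lists what must be running before it.
SERVICE_DEPENDENCIES: Dict[str, List[str]] = {
    "gateway": ["processor"],
    "processor": ["database"],
    "database": [],
}


def services_for(tags: Iterable[str]) -> List[str]:
    """Return the services to start, dependencies first, for a scenario's tags."""
    ordered: List[str] = []
    seen: Set[str] = set()

    def visit(service: str) -> None:
        if service in seen:
            return
        seen.add(service)
        for dependency in SERVICE_DEPENDENCIES.get(service, []):
            visit(dependency)
        ordered.append(service)  # appended only after its dependencies

    for tag in tags:
        if tag.startswith(REQUIRES_PREFIX):
            visit(tag[len(REQUIRES_PREFIX):])
    return ordered


print(services_for(["bk.business_requirements", "requires.gateway"]))
```

With something along these lines, a runner could read the tags of the selected scenarios, compute the union of required services, and bring up only those before the first step executes.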

Clear Street 💗 tests

Using BDD and E2E tests greatly helps us ship quality code. Benefits include:

- Reducing risk: E2E tests help us discover tricky bugs that unit tests can’t, and have already saved us from accidentally shipping dangerous code on multiple occasions. Thanks, E2E tests!

- Increasing confidence: If an E2E test run fails in CI, we don’t ship that code! When all of the e2e tests pass, we can ship with confidence that the new code does not break old behaviors.

- Improving communications: When a Clear Street engineer develops a new feature, they write the scenarios and share them with the business stakeholders before development even starts. This common understanding helps avoid many communication breakdowns in the development process.

- Documentation: When a new engineer or business stakeholder joins a project, reviewing the existing scenarios can be of great help in understanding its function.

All in all, we highly recommend using BDD to create E2E tests defined with a shared language for projects where correctness is critical and the business language is complicated.

We are far from done, but very proud of what we’ve achieved so far.

Clear Street does not provide investment, legal, regulatory, tax, or compliance advice. Consult professionals in these fields to address your specific circumstances. These materials are: (i) solely an overview of Clear Street’s products and services; (ii) provided for informational purposes only; and (iii) subject to change without notice or obligation to replace any information contained therein. Products and services are offered by Clear Street LLC as a Broker Dealer member FINRA and SIPC and a Futures Commission Merchant registered with the CFTC and member of NFA. Additional information about Clear Street is available on FINRA BrokerCheck, including its Customer Relationship Summary and NFA BASIC | NFA (futures.org). Copyright © 2024 Clear Street LLC. All rights reserved. Clear Street and the Shield Logo are Registered Trademarks of Clear Street LLC